Why Quality Matters in ADAS Data Annotation for Vehicle Safety Systems

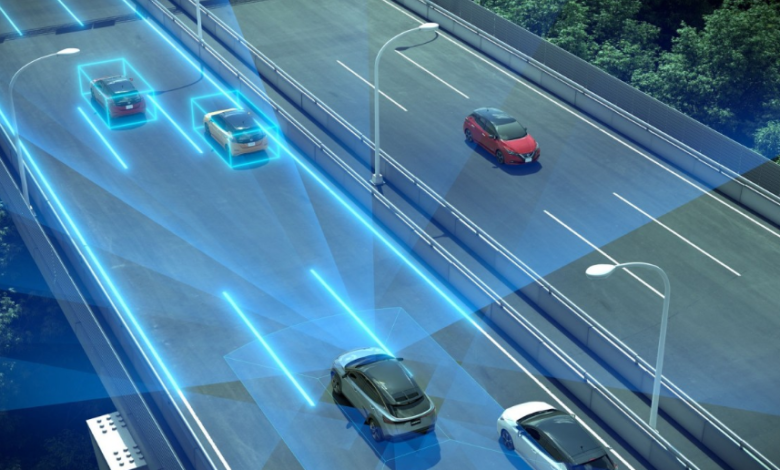

As vehicles advance toward autonomy, the responsibility for ensuring road safety increasingly shifts from human drivers to the systems embedded in the vehicle. Advanced Driver Assistance Systems (ADAS) are designed to aid drivers in navigation, hazard detection, and collision prevention. Central to the effectiveness of these systems is ADAS data annotation, the structured process of labeling visual and sensor-based data that enables machine learning algorithms to interpret the driving environment accurately.

Quality in annotation directly influences how well ADAS models can identify road signs, detect pedestrians, track lanes, and respond to unpredictable road scenarios. In applications where milliseconds determine outcomes, precision in data preparation is not optional; it is critical.

The Foundation of ADAS: Annotated Data

ADAS models learn from large volumes of training data captured by in-vehicle sensors such as cameras, LiDAR, radar, and ultrasonic systems. Annotated datasets make it possible for these models to distinguish between cars, cyclists, traffic signals, and road boundaries under varied lighting and weather conditions.

High-quality annotations do more than inform algorithms; they shape the system's behavior in real-world scenarios. A model trained on mislabeled or inconsistent data can produce misclassifications that compromise driver and passenger safety. This is especially dangerous in edge cases where the system must make split-second decisions.

Why Annotation Quality Cannot Be Compromised

1. Real-Time Safety Outcomes Depend on Precision

Annotation errors may seem minor during dataset preparation, but they can cause critical failures once the model is deployed. For example, if a pedestrian is labeled incorrectly or not labeled at all, the model may learn to miss similar pedestrians, and the system might fail to initiate braking in time. Accurate labeling is a precondition for reliable object detection, classification, and response prediction.
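One common way to catch missing or badly placed labels is to compare a labeling pass against a reviewed reference using intersection over union (IoU). The following is a minimal sketch, not a production tool: the `(x_min, y_min, x_max, y_max)` box format, the function names, and the 0.5 threshold are assumptions chosen for illustration.

```python
from typing import List, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def find_unmatched(reference: List[Box], candidate: List[Box],
                   threshold: float = 0.5) -> List[int]:
    """Indices of reference boxes with no candidate box at IoU >= threshold.

    A non-empty result suggests a missed or badly placed label
    (hypothetical review rule, for illustration only).
    """
    return [
        i for i, ref in enumerate(reference)
        if not any(iou(ref, cand) >= threshold for cand in candidate)
    ]


if __name__ == "__main__":
    reviewed = [(0, 0, 10, 10), (20, 20, 30, 30)]  # e.g. two pedestrians
    first_pass = [(1, 1, 10, 10)]                  # second pedestrian missed
    print(find_unmatched(reviewed, first_pass))
```

In this example the second reference box has no counterpart in the first pass, so its index is returned and the frame can be sent back for review.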

2. Multi-Sensor Environments Require Consistency

ADAS models integrate data from multiple sources simultaneously. When combining data from LIDAR, cameras, and radar, annotations must be synchronized across all sensor modalities. Any inconsistency in the spatial or temporal labeling of this data introduces ambiguity, which weakens model predictions.
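Temporal consistency across sensors can be checked before any labels are transferred between modalities. The sketch below pairs each camera frame with the nearest LiDAR sweep by timestamp and leaves frames unpaired when the gap is too large. The function names, the use of seconds as the unit, and the 50 ms tolerance are illustrative assumptions; real pipelines typically rely on hardware synchronization and calibration data not shown here.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple


def nearest_index(sorted_ts: List[float], t: float) -> int:
    """Index of the timestamp in sorted_ts closest to t (sorted_ts non-empty)."""
    pos = bisect_left(sorted_ts, t)
    if pos == 0:
        return 0
    if pos == len(sorted_ts):
        return len(sorted_ts) - 1
    before, after = sorted_ts[pos - 1], sorted_ts[pos]
    return pos if (after - t) < (t - before) else pos - 1


def pair_frames(camera_ts: List[float], lidar_ts: List[float],
                max_offset_s: float = 0.05) -> List[Tuple[int, Optional[int]]]:
    """Pair each camera frame with its nearest LiDAR sweep.

    Returns (camera_index, lidar_index) tuples; lidar_index is None when
    the closest sweep is further away than max_offset_s (assumed tolerance).
    lidar_ts must be sorted in ascending order.
    """
    pairs: List[Tuple[int, Optional[int]]] = []
    for i, t in enumerate(camera_ts):
        j = nearest_index(lidar_ts, t)
        matched = j if abs(lidar_ts[j] - t) <= max_offset_s else None
        pairs.append((i, matched))
    return pairs


if __name__ == "__main__":
    camera = [0.00, 0.10, 0.35]
    lidar = [0.01, 0.11, 0.20]
    for cam_idx, lidar_idx in pair_frames(camera, lidar):
        print(cam_idx, lidar_idx)
```

Frames that come back unpaired are candidates for exclusion or manual review, since a label projected from one sensor onto a poorly aligned frame from another introduces exactly the spatial ambiguity described above.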

3. Diverse Scenarios Are Essential for Model Robustness

Models must be exposed to an extensive variety of driving environments to operate effectively. High-quality annotation ensures proper coverage of common situations and rare or complex edge cases, such as temporary road signs, construction zones, or low-light pedestrian crossings. Without a representative dataset, the model may underperform in less frequent but high-risk scenarios.
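Coverage of rare scenarios can be audited with a simple tally of per-frame scenario tags. The following sketch flags required scenarios whose share of the dataset falls below a minimum. The tag names, the `underrepresented` function, and the 5% floor are hypothetical and would need to be set per project.

```python
from collections import Counter
from typing import Dict, Iterable, List


def underrepresented(tags: Iterable[str], required: List[str],
                     min_share: float) -> Dict[str, float]:
    """Map each required scenario tag to its dataset share if below min_share.

    Tags that never appear are reported with a share of 0.0.
    """
    counts = Counter(tags)
    total = sum(counts.values())
    flagged: Dict[str, float] = {}
    for tag in required:
        share = counts[tag] / total if total else 0.0
        if share < min_share:
            flagged[tag] = share
    return flagged


if __name__ == "__main__":
    # Hypothetical per-frame scenario tags for a 100-frame sample.
    frame_tags = (["highway"] * 90
                  + ["construction_zone"] * 8
                  + ["night_pedestrian"] * 2)
    required_tags = ["highway", "construction_zone",
                     "night_pedestrian", "temporary_sign"]
    print(underrepresented(frame_tags, required_tags, min_share=0.05))
```

A report like this does not measure label correctness, only whether the high-risk scenarios are present in sufficient quantity to be learned at all.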

Leading Companies in ADAS Data Annotation

Several companies have built significant capabilities in delivering precise annotation for autonomous driving and ADAS:

- Scale AI: Known for providing sensor fusion annotation for autonomous vehicle applications.

- Digital Divide Data (DDD): Delivers ADAS-focused annotation with expertise in real-time safety systems, scenario simulation, and ROS-based development.

- iMerit: Combines technology with a skilled workforce to provide high-volume image and sensor annotation.

- Sama: Delivers high-quality labeled datasets while emphasizing ethical sourcing and impact-driven employment.

- Lionbridge AI: Specializes in multilingual and multi-sensor annotation projects across global environments.

These organizations play a crucial role in supporting the development of safe autonomous systems through rigorous annotation processes.

Cross-Sector Relevance of ADAS Annotation

The principles applied in ADAS data annotation have practical implications beyond the automotive domain. In areas such as national security, surveillance, and aerospace, accurate annotation enables advanced recognition systems to operate effectively in mission-critical environments. The overlap between autonomous navigation and defense applications highlights the shared need for reliability and contextual accuracy in machine learning models. To understand how these techniques are deployed in strategic environments, explore insights on the Applications of Computer Vision in Defense.

The Role of Synthetic Data in Enhancing Annotation Quality

As the demand for comprehensive and diverse training data increases, synthetic data generation has emerged as a valuable solution. It enables the creation of labeled scenarios that are difficult or dangerous to capture in real life, such as low-visibility conditions or rare accident scenarios.

When used effectively, synthetic data supports model generalization and addresses dataset imbalance. However, producing high-quality synthetic data requires careful design to ensure it mirrors real-world physics and environments. Established frameworks help maintain the fidelity and applicability of synthetic datasets. Industry best practices are outlined in Best Practices for Synthetic Data Generation in Generative AI.

Conclusion

As advanced driver assistance systems evolve, the integrity of the data used to train them becomes increasingly vital. ADAS data annotation is not just a preparatory step; it is the core enabler of vehicle perception, prediction, and control. Whether for improving pedestrian detection or navigating complex intersections, the precision of each label directly contributes to how safe and responsive the vehicle becomes.

In a domain where the cost of error is measured in human lives, annotation quality must meet the highest standards. Organizations developing ADAS solutions must invest in annotation processes that are scalable, context-aware, and verified through consistent quality checks. This focus on accuracy will continue to define the success and safety of intelligent transport systems for years to come.